
Big Data: you have surely heard the term before. For the last four or five years, everyone has been talking about it. But do you really know what Big Data is, how it affects our lives, and why organizations are hunting for professionals with Big Data skills? In this Big Data Tutorial, I will give you a complete overview of Big Data. For further details, refer to the Big Data Course.

Below are the topics which I will cover in this Big Data Tutorial:

Let me start this Big Data Tutorial with a short story.

In ancient days, people used to travel from one village to another on a horse-drawn cart. As time passed, villages became towns and people spread out, so the distance between towns increased. Travelling between towns, along with luggage, became a problem. Out of the blue, one smart fella suggested that we should groom and feed the horse more to solve this problem. This solution is not that bad, but do you think a horse can become an elephant? I don’t think so. Another smart guy said that instead of 1 horse pulling the cart, we should have 4 horses pull the same cart. What do you think of this solution? I think it is a fantastic one. Now people can travel large distances in less time and even carry more luggage.

The same concept applies to Big Data. Until recently, we were fine storing data on our own servers because the volume was limited and the time needed to process it was acceptable. But in today’s technological world, data is growing too fast and people rely on it far more often. At the speed at which data is growing, it is becoming impossible to store it all on any single server.

Through this blog on Big Data Tutorial, let us explore the sources of Big Data that traditional systems fail to store and process. You can even check out the details of Big Data with the Data Engineer Course.

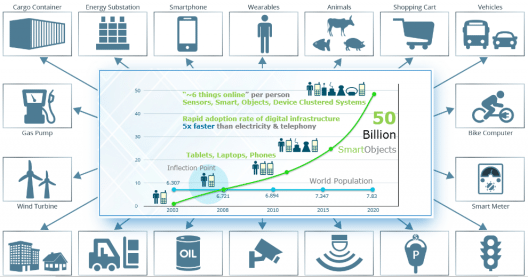

The quantity of data on planet Earth is growing exponentially for many reasons. Various sources and our day-to-day activities generate lots of data. With the advent of the web, the whole world has gone online, and every single thing we do leaves a digital trace. With smart objects going online, the data growth rate has increased rapidly. The major sources of Big Data are social media sites, sensor networks, digital images and videos, cell phones, purchase transaction records, web logs, medical records, archives, military surveillance, eCommerce, complex scientific research and so on. All this information amounts to quintillions of bytes of data. By 2020, data volumes are predicted to reach around 40 Zettabytes, which is equivalent to every single grain of sand on the planet multiplied by seventy-five.

Get an in-depth understanding of Big Data and its concepts in the Big Data Hadoop Certification.

Big Data is a term for collections of data sets so large and complex that they are difficult to store and process using available database management tools or traditional data processing applications. The challenges include capturing, curating, storing, searching, sharing, transferring, analyzing and visualizing this data.

The five characteristics that define Big Data are: Volume, Velocity, Variety, Veracity and Value.

Volume refers to the ‘amount of data’, which is growing day by day at a very fast pace. The data generated by humans, machines and their interactions on social media alone is massive. Researchers have predicted that 40 Zettabytes (40,000 Exabytes) will be generated by 2020, which is an increase of 300 times from 2005.
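To make these magnitudes concrete, here is a minimal sketch of the unit arithmetic behind the figures above. The `convert` helper and the `UNITS` list are names I made up for illustration, and I am assuming decimal (SI) units where each step is a factor of 1000. The 2005 figure is only a back-of-the-envelope value implied by the "300 times" claim, not a number from the original prediction.

```python
# Decimal (SI) storage units, each one 1000 times larger than the previous.
UNITS = ["B", "KB", "MB", "GB", "TB", "PB", "EB", "ZB"]


def convert(value, from_unit, to_unit):
    """Convert a data size between two SI units (hypothetical helper)."""
    steps = UNITS.index(from_unit) - UNITS.index(to_unit)
    return value * 1000 ** steps


# 40 Zettabytes expressed in Exabytes.
eb_2020 = convert(40, "ZB", "EB")

# If 2020 is 300 times the 2005 volume, the implied 2005 volume in Exabytes:
implied_2005_eb = eb_2020 / 300

print(eb_2020)
print(round(implied_2005_eb, 1))
```

The conversion confirms that 40 Zettabytes and 40,000 Exabytes are the same quantity, and it gives a rough sense of how small the starting point was in comparison.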

Velocity is defined as the pace at which different sources generate data every day. This flow of data is massive and continuous. There are 1.03 billion Daily Active Users (Facebook DAU) on mobile as of now, which is an increase of 22% year-over-year. This shows how fast the number of users is growing on social media and how fast data is being generated daily. If you are able to handle the velocity, you will be able to generate insights and make decisions based on real-time data.

Variety refers to the different types of data being generated. As many sources contribute to Big Data, the data they generate differs in type: it can be structured, semi-structured or unstructured. Earlier, we used to get data from Excel and databases; now data comes in the form of images, audio, video, sensor data and so on, as shown in the image below. This variety of unstructured data creates problems in capturing, storing, mining and analyzing the data.

Veracity refers to data that is in doubt, that is, uncertainty about the available data due to inconsistency and incompleteness. In the image below, you can see that a few values are missing in the table. Also, a few values are hard to accept, for example the minimum value of 15000 in the 3rd row, which is not possible. This inconsistency and incompleteness is what Veracity is about.

Available data can sometimes get messy and may be difficult to trust. With many forms of big data, quality and accuracy are difficult to control, as with Twitter posts full of hashtags, abbreviations, typos and colloquial speech. The sheer volume is often the reason behind the lack of quality and accuracy in the data.
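A veracity check like the one described above can be sketched in a few lines. The table, the field names and the plausible range are all made up for illustration (the 15000 minimum in the 3rd row mirrors the example mentioned earlier), so treat this as one possible approach rather than a standard method.

```python
# Hypothetical readings; None marks a missing value.
rows = [
    {"sensor": "A", "min_temp": 12.5, "max_temp": 31.0},
    {"sensor": "B", "min_temp": None, "max_temp": 29.4},
    {"sensor": "C", "min_temp": 15000, "max_temp": 30.2},
]

# Assumed plausible range for these fields (an illustrative choice).
PLAUSIBLE_RANGE = (-90.0, 60.0)


def find_issues(rows, fields, valid_range):
    """Return (row_index, field, problem) for each missing or implausible value."""
    low, high = valid_range
    issues = []
    for index, row in enumerate(rows):
        for field in fields:
            value = row.get(field)
            if value is None:
                issues.append((index, field, "missing"))
            elif not low <= value <= high:
                issues.append((index, field, "out of range"))
    return issues


issues = find_issues(rows, ["min_temp", "max_temp"], PLAUSIBLE_RANGE)
for index, field, problem in issues:
    print(f"row {index + 1}: {field} is {problem}")
```

Flagging incomplete and inconsistent values early is usually cheaper than discovering them after an analysis has already been built on top of them.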


After Volume, Velocity, Variety and Veracity, there is one more V to take into account when looking at Big Data: Value. It is all well and good to have access to big data, but unless we can turn it into value, it is useless. By turning it into value I mean: does it benefit the organizations that are analyzing it? Is the organization working on Big Data achieving a high ROI (Return On Investment)? Unless working on Big Data adds to their profits, it is useless.


As discussed under Variety, different types of data are being generated every day. So, let us now understand the types of data:

Big Data could be of three types:

Data that can be stored and processed in a fixed format is called Structured Data. Data stored in a relational database management system (RDBMS) is one example of ‘structured’ data. Structured data is easy to process because it has a fixed schema. Structured Query Language (SQL) is often used to manage this kind of data.
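As a small illustration of a fixed schema and SQL, here is a sketch using Python’s built-in `sqlite3` module with an in-memory database. The `purchases` table, its columns and its rows are hypothetical, chosen only to show the idea.

```python
import sqlite3

# An in-memory relational database; nothing is written to disk.
conn = sqlite3.connect(":memory:")

# The fixed schema: every row must have these columns with these types.
conn.execute(
    "CREATE TABLE purchases ("
    "id INTEGER PRIMARY KEY, customer TEXT NOT NULL, amount REAL NOT NULL)"
)

# Hypothetical purchase transaction records.
conn.executemany(
    "INSERT INTO purchases (customer, amount) VALUES (?, ?)",
    [("asha", 20.0), ("ben", 35.5), ("asha", 4.5)],
)

# Because the structure is known in advance, aggregation is a single query.
totals = conn.execute(
    "SELECT customer, SUM(amount) FROM purchases "
    "GROUP BY customer ORDER BY customer"
).fetchall()
conn.close()

print(totals)
```

Because every row follows the same schema, the database can answer the question without inspecting the shape of each record first.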

Semi-Structured Data is data that does not follow the formal structure of a data model, such as a table definition in a relational DBMS, but still has some organizational properties, like tags and other markers that separate semantic elements, which make it easier to analyze. XML files and JSON documents are examples of semi-structured data.
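The following sketch shows what "no fixed schema, but still tagged" can look like in practice, using Python’s standard `json` module. The two documents and their field names are invented for illustration.

```python
import json

# Two hypothetical JSON documents. Both are self-describing through their
# keys, but they do not share the same set of fields.
documents = [
    '{"user": "asha", "text": "hello", "tags": ["intro"]}',
    '{"user": "ben", "text": "big data", "location": {"city": "Pune"}}',
]

records = [json.loads(doc) for doc in documents]

# Keys act as markers for semantic elements, so fields can be read by name,
# with a fallback for documents that do not contain them.
cities = [rec.get("location", {}).get("city", "unknown") for rec in records]
field_sets = [sorted(rec.keys()) for rec in records]

print(cities)
print(field_sets)
```

Unlike a relational table, nothing forces the second document to have `tags` or the first one to have `location`, yet both remain easy to parse.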

Data that has an unknown form, cannot be stored in an RDBMS and cannot be analyzed unless it is transformed into a structured format is called Unstructured Data. Text files and multimedia content such as images, audio and video are examples of unstructured data. Unstructured data is growing faster than the other types; experts say that 80 percent of the data in an organization is unstructured.
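Here is a minimal sketch of what "transforming into a structured format" might mean for free text. The posts are made up, and the `to_record` helper with its two output fields is just one illustrative choice of structure among many.

```python
import re
from collections import Counter

# Hypothetical free-text posts with hashtags and informal punctuation.
posts = [
    "Loving the new phone!! #gadgets #BigData",
    "big data is everywhere... #bigdata",
]


def to_record(post):
    """Turn one free-text post into a record with fixed fields."""
    hashtags = [tag.lower() for tag in re.findall(r"#(\w+)", post)]
    # Remove hashtags, then keep only alphabetic words.
    body = re.sub(r"#\w+", " ", post.lower())
    words = re.findall(r"[a-z']+", body)
    return {"hashtags": hashtags, "word_count": len(words)}


records = [to_record(post) for post in posts]

# Once the fields are fixed, ordinary aggregation becomes possible.
hashtag_counts = Counter(tag for rec in records for tag in rec["hashtags"])

print(records)
print(hashtag_counts.most_common())
```

After this step each post has the same fields, so the result could be loaded into a table and queried like any other structured data.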

Till now, I have just covered the introduction to Big Data. The rest of this Big Data tutorial talks about examples, applications and challenges in Big Data. You can even check out the details of Big Data with the Azure Data Engineering Training in Australia.

Every day we upload millions of bytes of data. 90% of the world’s data has been created in the last two years.

We cannot talk about data without talking about the people who benefit from Big Data applications. Almost all industries today are leveraging Big Data applications in one way or another.

Someone has rightly said: “Not everything in the garden is rosy!” So far in this Big Data tutorial, I have shown you only the rosy picture of Big Data. But if it were so easy to leverage Big Data, don’t you think all organizations would invest in it? Let me tell you upfront, that is not the case. Several challenges come along when you work with Big Data.

Now that you are familiar with Big Data and its various features, the next section of this blog on Big Data Tutorial will shed some light on the major challenges that come with Big Data.

Let me tell you a few challenges which come along with Big Data:

We have a savior to deal with Big Data challenges: it’s Hadoop. Hadoop is an open source, Java-based programming framework that supports the storage and processing of extremely large data sets in a distributed computing environment. It is an Apache project sponsored by the Apache Software Foundation.

Hadoop, with its distributed processing, handles large volumes of structured and unstructured data more efficiently than the traditional enterprise data warehouse. Hadoop makes it possible to run applications on systems with thousands of commodity hardware nodes, and to handle thousands of terabytes of data. Organizations are adopting Hadoop because it is open source software and can run on commodity hardware (ordinary, inexpensive machines much like your personal computer). The initial cost savings are dramatic as commodity hardware is very cheap. As organizational data increases, you simply add more commodity hardware on the fly to store it, and hence Hadoop proves to be economical. Additionally, Hadoop has a robust Apache community behind it that continues to contribute to its advancement.
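To give a feel for the "many horses pulling one cart" idea behind distributed processing, here is a plain-Python stand-in for the split, map and reduce steps. It does not use Hadoop or any of its APIs; the function names, the log lines and the way blocks are assigned to "nodes" are all assumptions made for illustration, and everything runs in a single process.

```python
from collections import Counter
from functools import reduce


def split_into_blocks(lines, n_nodes):
    """Deal the input lines out to n_nodes simulated worker nodes."""
    return [lines[i::n_nodes] for i in range(n_nodes)]


def map_block(block):
    """Work done independently on each node: count words in its own block."""
    counts = Counter()
    for line in block:
        counts.update(line.lower().split())
    return counts


def merge_counts(left, right):
    """Reduce step: combine the partial counts from two nodes."""
    return left + right


# Hypothetical log lines standing in for a large data set.
log_lines = [
    "error disk full",
    "info job started",
    "error network down",
    "info job finished",
]

blocks = split_into_blocks(log_lines, n_nodes=2)
partials = [map_block(block) for block in blocks]  # would run in parallel
total = reduce(merge_counts, partials, Counter())

print(total.most_common(3))
```

The point of the sketch is that each block is processed without looking at the others, so adding more nodes means more blocks can be handled at once, much like adding horses to the cart instead of feeding one horse more.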

As promised earlier, through this blog on Big Data Tutorial, I have given you as much insight into Big Data as possible. This is the end of the Big Data Tutorial. The next step forward is to learn Hadoop. We have a series of Hadoop tutorial blogs which will give you detailed knowledge of the complete Hadoop ecosystem.

Now that you have understood what Big Data is, check out the Big Data training in Chennai by Edureka, a trusted online learning company with a network of more than 250,000 satisfied learners spread across the globe. Edureka’s Big Data Architect Course helps learners become experts in HDFS, Yarn, MapReduce, Pig, Hive, HBase, Oozie, Flume and Sqoop using real-time use cases from the Retail, Social Media, Aviation, Tourism and Finance domains.
